Context

Reduction of child mortality is reflected in several of the United Nations’ Sustainable Development Goals and is a key indicator of human progress. The UN expects that by 2030, countries end preventable deaths of newborns and children under 5 years of age, with all countries aiming to reduce under‑5 mortality to at least as low as 25 per 1,000 live births.

Parallel to notion of child mortality is of course maternal mortality, which accounts for 295 000 deaths during and following pregnancy and childbirth (as of 2017). The vast majority of these deaths (94%) occurred in low-resource settings, and most could have been prevented.

In light of what was mentioned above, Cardiotocograms (CTGs) are a simple and cost accessible option to assess fetal health, allowing healthcare professionals to take action in order to prevent child and maternal mortality. The equipment itself works by sending ultrasound pulses and reading its response, thus shedding light on fetal heart rate (FHR), fetal movements, uterine contractions and more.

Project content

- Data Exploration and Visualization

- Data cleaning

- Data Transforming

- Modeling and Hyperparameter tunning

- Unsupervised training

- Evaluation

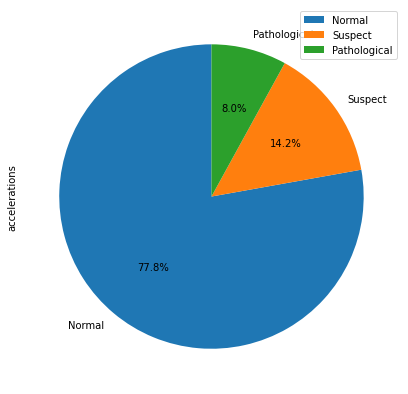

Target Variable

Let’s talk about our target variable, fetal health. For this project, fetal health was separated into 3 different categories.

- Normal

- Suspect

- Pathological

We will use other variables to try to predict in which category we would include the health of a baby never before seen by the algorithm. Given the importance of the prediction, we will set our goal to develop a tool that has at least a 90% accuracy.

Libraries and packages

import warnings

warnings.simplefilter(action ="ignore")

warnings.filterwarnings('ignore')

# Import the necessary packages

import numpy as np

import pandas as pd

# Data visualization

import matplotlib.pyplot as plt

import seaborn as sns

# Algorithms

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

from sklearn.neighbors import KNeighborsClassifier

from sklearn.ensemble import RandomForestClassifier

from sklearn.ensemble import GradientBoostingClassifier

from lightgbm import LGBMClassifier

from sklearn.model_selection import RandomizedSearchCV

from sklearn.model_selection import GridSearchCV

from sklearn.metrics import classification_report

Let´s load the dataset

data = pd.read_csv('data/fetal_health.csv')

X = data.drop('fetal_health', axis=1)

y = data['fetal_health']

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.3, random_state=123)

train = X_train.join(y_train)

Data Exploration, Visualization and Analysis

Exploration

train.head(5)

| baseline value | accelerations | fetal_movement | uterine_contractions | light_decelerations | severe_decelerations | prolongued_decelerations | abnormal_short_term_variability | mean_value_of_short_term_variability | percentage_of_time_with_abnormal_long_term_variability | ... | histogram_min | histogram_max | histogram_number_of_peaks | histogram_number_of_zeroes | histogram_mode | histogram_mean | histogram_median | histogram_variance | histogram_tendency | fetal_health | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 678 | 140.0 | 0.010 | 0.000 | 0.000 | 0.000 | 0.0 | 0.0 | 58.0 | 1.2 | 0.0 | ... | 62.0 | 188.0 | 4.0 | 0.0 | 176.0 | 164.0 | 169.0 | 27.0 | 1.0 | 1.0 |

| 512 | 154.0 | 0.003 | 0.003 | 0.003 | 0.000 | 0.0 | 0.0 | 56.0 | 0.6 | 1.0 | ... | 140.0 | 171.0 | 1.0 | 0.0 | 161.0 | 160.0 | 162.0 | 2.0 | 1.0 | 1.0 |

| 613 | 146.0 | 0.009 | 0.003 | 0.001 | 0.000 | 0.0 | 0.0 | 41.0 | 1.9 | 0.0 | ... | 50.0 | 180.0 | 7.0 | 0.0 | 154.0 | 152.0 | 155.0 | 18.0 | 1.0 | 1.0 |

| 831 | 152.0 | 0.000 | 0.000 | 0.002 | 0.002 | 0.0 | 0.0 | 61.0 | 0.5 | 61.0 | ... | 99.0 | 160.0 | 4.0 | 3.0 | 159.0 | 155.0 | 158.0 | 4.0 | 1.0 | 2.0 |

| 369 | 138.0 | 0.000 | 0.008 | 0.001 | 0.002 | 0.0 | 0.0 | 64.0 | 0.4 | 30.0 | ... | 118.0 | 159.0 | 2.0 | 0.0 | 144.0 | 143.0 | 145.0 | 5.0 | 0.0 | 2.0 |

5 rows × 22 columns

train.info()

<class 'pandas.core.frame.DataFrame'>

Int64Index: 1488 entries, 678 to 1346

Data columns (total 22 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 baseline value 1488 non-null float64

1 accelerations 1488 non-null float64

2 fetal_movement 1488 non-null float64

3 uterine_contractions 1488 non-null float64

4 light_decelerations 1488 non-null float64

5 severe_decelerations 1488 non-null float64

6 prolongued_decelerations 1488 non-null float64

7 abnormal_short_term_variability 1488 non-null float64

8 mean_value_of_short_term_variability 1488 non-null float64

9 percentage_of_time_with_abnormal_long_term_variability 1488 non-null float64

10 mean_value_of_long_term_variability 1488 non-null float64

11 histogram_width 1488 non-null float64

12 histogram_min 1488 non-null float64

13 histogram_max 1488 non-null float64

14 histogram_number_of_peaks 1488 non-null float64

15 histogram_number_of_zeroes 1488 non-null float64

16 histogram_mode 1488 non-null float64

17 histogram_mean 1488 non-null float64

18 histogram_median 1488 non-null float64

19 histogram_variance 1488 non-null float64

20 histogram_tendency 1488 non-null float64

21 fetal_health 1488 non-null float64

dtypes: float64(22)

memory usage: 307.4 KB

train.describe().T

| count | mean | std | min | 25% | 50% | 75% | max | |

|---|---|---|---|---|---|---|---|---|

| baseline value | 1488.0 | 133.290995 | 9.883484 | 106.0 | 126.000 | 133.000 | 141.000 | 160.000 |

| accelerations | 1488.0 | 0.003185 | 0.003862 | 0.0 | 0.000 | 0.002 | 0.006 | 0.018 |

| fetal_movement | 1488.0 | 0.008259 | 0.042758 | 0.0 | 0.000 | 0.000 | 0.003 | 0.481 |

| uterine_contractions | 1488.0 | 0.004313 | 0.002934 | 0.0 | 0.002 | 0.004 | 0.006 | 0.014 |

| light_decelerations | 1488.0 | 0.001862 | 0.002961 | 0.0 | 0.000 | 0.000 | 0.003 | 0.015 |

| severe_decelerations | 1488.0 | 0.000003 | 0.000052 | 0.0 | 0.000 | 0.000 | 0.000 | 0.001 |

| prolongued_decelerations | 1488.0 | 0.000141 | 0.000535 | 0.0 | 0.000 | 0.000 | 0.000 | 0.005 |

| abnormal_short_term_variability | 1488.0 | 47.096102 | 17.085395 | 12.0 | 32.000 | 49.000 | 61.000 | 87.000 |

| mean_value_of_short_term_variability | 1488.0 | 1.328831 | 0.886662 | 0.2 | 0.700 | 1.200 | 1.700 | 7.000 |

| percentage_of_time_with_abnormal_long_term_variability | 1488.0 | 9.983871 | 18.577807 | 0.0 | 0.000 | 0.000 | 11.000 | 91.000 |

| mean_value_of_long_term_variability | 1488.0 | 8.246438 | 5.693201 | 0.0 | 4.600 | 7.500 | 10.900 | 50.700 |

| histogram_width | 1488.0 | 69.969758 | 39.372667 | 3.0 | 36.000 | 67.000 | 100.250 | 176.000 |

| histogram_min | 1488.0 | 93.774194 | 29.700954 | 50.0 | 67.000 | 93.000 | 120.000 | 155.000 |

| histogram_max | 1488.0 | 163.743952 | 18.140627 | 122.0 | 151.000 | 162.000 | 174.000 | 238.000 |

| histogram_number_of_peaks | 1488.0 | 4.040995 | 3.005767 | 0.0 | 2.000 | 3.000 | 6.000 | 18.000 |

| histogram_number_of_zeroes | 1488.0 | 0.327957 | 0.753364 | 0.0 | 0.000 | 0.000 | 0.000 | 10.000 |

| histogram_mode | 1488.0 | 137.543683 | 16.182615 | 60.0 | 129.000 | 139.000 | 148.000 | 187.000 |

| histogram_mean | 1488.0 | 134.691532 | 15.432030 | 73.0 | 125.000 | 136.000 | 145.000 | 180.000 |

| histogram_median | 1488.0 | 138.201613 | 14.269137 | 79.0 | 129.000 | 139.000 | 148.000 | 183.000 |

| histogram_variance | 1488.0 | 18.342742 | 28.499592 | 0.0 | 2.000 | 7.000 | 23.000 | 269.000 |

| histogram_tendency | 1488.0 | 0.324597 | 0.603854 | -1.0 | 0.000 | 0.000 | 1.000 | 1.000 |

| fetal_health | 1488.0 | 1.301747 | 0.609009 | 1.0 | 1.000 | 1.000 | 1.000 | 3.000 |

Visualization

# Definition of a function to visualize correlation between variables

import seaborn as sn

import matplotlib.pyplot as plt

def plot_correlation(df):

corr_matrix = df.corr()

heat_map = sn.heatmap(corr_matrix, annot=False)

plt.show(heat_map)

Lets visualize first and comprehend the meaning behind our target variable, fetal_health

train.groupby(['fetal_health']).count().plot(kind='pie', y='accelerations', autopct='%1.1f%%',

startangle=90, labels=['Normal', 'Suspect', 'Pathological'], figsize=(7, 7))

<matplotlib.axes._subplots.AxesSubplot at 0x2a5c37cdb20>

In the chart below we can see the correlation between fetal health and the rest of the variables

plot_correlation(train)

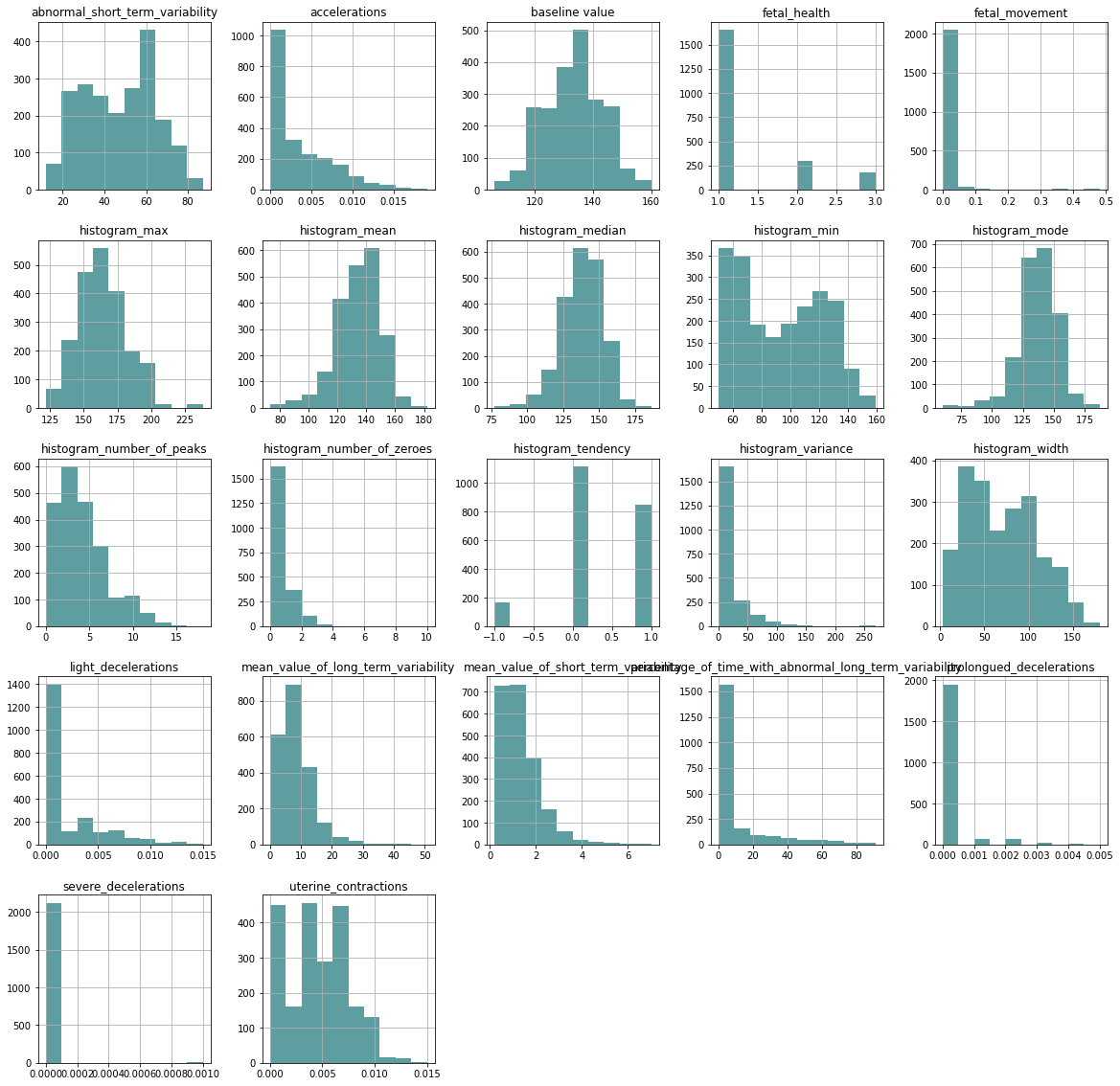

In the following comparison, we can see the distribution of data between each variable and fetal health

data_hist_plot = data.hist(figsize = (20,20), color = "#5F9EA0")

Data Cleaning

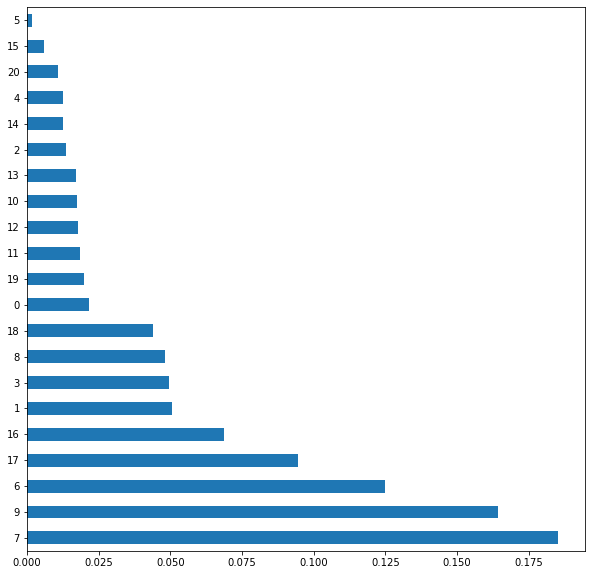

we will clean the data set of variables that contribute noise to the machine learning algorithm

from sklearn.ensemble import ExtraTreesRegressor

import matplotlib.pyplot as plt

etr_model = ExtraTreesRegressor()

etr_model.fit(X_train, y_train)

feat_importances = pd.Series(etr_model.feature_importances_)

feat_importances.nlargest(30).plot(kind='barh', figsize=(10, 10))

plt.show()

selected_features = etr_model.feature_importances_

selected = [index for index in range(

selected_features.size) if selected_features[index] >= 0.06]

X_train = X_train.iloc[:, selected]

X_test = X_test.iloc[:, selected]

Data Transforming

Now it is time to normalize data, in this case we used MinMaxScaler with a range of 0-1

from sklearn.preprocessing import MinMaxScaler

from sklearn.compose import ColumnTransformer

dif_values = y_train.unique()

# Now we create the transformers

t_norm = ("normalizer", MinMaxScaler(feature_range=(0, 1)), X_train.columns)

column_transformer_X = ColumnTransformer(

transformers=[t_norm], remainder='passthrough')

column_transformer_X.fit(X_train);

X_train = column_transformer_X.transform(X_train)

X_test = column_transformer_X.transform(X_test)

Modeling and Hyperparameter tunning

We will run serveral grid search (some of them randomized to optimize time/performance efficiency)

from sklearn.metrics import make_scorer

from sklearn.pipeline import make_pipeline

from sklearn.pipeline import Pipeline

from sklearn.metrics import accuracy_score

from sklearn.metrics import classification_report

from lightgbm import LGBMClassifier

from sklearn import tree

from sklearn.ensemble import GradientBoostingClassifier

X_val_train, X_val_test, y_val_train, y_val_test = train_test_split(

X_train, y_train, test_size=0.20, random_state=123)

scorer = make_scorer(accuracy_score, greater_is_better=True)

Decision Tree Classifier

pipe_tree = tree.DecisionTreeClassifier(random_state=123)

criteria = ['gini', 'entropy']

splitter = ['best', 'random']

max_depth = [int(x) for x in np.linspace(10, 110, num=22)]

max_features = ['auto', 'sqrt', 'log2', None]

min_samples_split = [2, 5, 10]

min_samples_leaf = [1, 2, 4]

param_grid_tree = {'max_features': max_features,

'max_depth': max_depth,

'min_samples_split': min_samples_split,

'min_samples_leaf': min_samples_leaf,

'criterion': criteria,

'splitter': splitter

}

gs_tree = GridSearchCV(estimator=pipe_tree, param_grid=param_grid_tree,

cv=10, verbose=2, n_jobs=-1)

best_tree = gs_tree.fit(X_val_train, y_val_train)

Fitting 10 folds for each of 3168 candidates, totalling 31680 fits

[Parallel(n_jobs=-1)]: Using backend LokyBackend with 12 concurrent workers.

[Parallel(n_jobs=-1)]: Done 17 tasks | elapsed: 1.3s

[Parallel(n_jobs=-1)]: Done 492 tasks | elapsed: 1.8s

[Parallel(n_jobs=-1)]: Done 20620 tasks | elapsed: 7.3s

[Parallel(n_jobs=-1)]: Done 31680 out of 31680 | elapsed: 9.8s finished

print(classification_report(y_val_test,best_tree.predict(X_val_test)))

precision recall f1-score support

1.0 0.95 0.96 0.96 240

2.0 0.78 0.73 0.75 44

3.0 0.69 0.79 0.73 14

accuracy 0.92 298

macro avg 0.81 0.82 0.81 298

weighted avg 0.92 0.92 0.92 298

print(best_tree.best_params_)

{'criterion': 'gini', 'max_depth': 10, 'max_features': 'auto', 'min_samples_leaf': 2, 'min_samples_split': 5, 'splitter': 'best'}

Logistic regression

pipe_lr = LogisticRegression()

penalty = ['l1', 'l2', 'elasticnet', 'none']

dual = [True, False]

c = np.linspace(0.0001, 10000, num=10)

tol = np.linspace(1e-4, 1e-2, num=10)

fit_intercept = [True, False]

class_weight = ['balanced', None]

solver = ['newton-cg', 'lbfgs', 'liblinear', 'sag', 'saga']

multi_class = ['auto', 'ovr', 'multinomial']

param_grid_lr = {'penalty': penalty,

'dual': dual,

'C': c,

'tol': tol,

'fit_intercept': fit_intercept,

'class_weight': class_weight,

'solver': solver,

'multi_class': multi_class}

gs_lr = GridSearchCV(estimator=pipe_lr, param_grid=param_grid_lr,

cv=10, verbose=2, n_jobs=-1)

gs_lr.fit(X_val_train, y_val_train)

[Parallel(n_jobs=-1)]: Using backend LokyBackend with 12 concurrent workers.

[Parallel(n_jobs=-1)]: Done 17 tasks | elapsed: 0.0s

Fitting 10 folds for each of 48000 candidates, totalling 480000 fits

[Parallel(n_jobs=-1)]: Done 1320 tasks | elapsed: 0.6s

[Parallel(n_jobs=-1)]: Done 14120 tasks | elapsed: 3.8s

[Parallel(n_jobs=-1)]: Done 32232 tasks | elapsed: 12.4s

[Parallel(n_jobs=-1)]: Done 55592 tasks | elapsed: 22.0s

[Parallel(n_jobs=-1)]: Done 73224 tasks | elapsed: 37.5s

[Parallel(n_jobs=-1)]: Done 98172 tasks | elapsed: 52.0s

[Parallel(n_jobs=-1)]: Done 122700 tasks | elapsed: 1.2min

[Parallel(n_jobs=-1)]: Done 164280 tasks | elapsed: 1.6min

[Parallel(n_jobs=-1)]: Done 201336 tasks | elapsed: 2.0min

[Parallel(n_jobs=-1)]: Done 254874 tasks | elapsed: 2.6min

[Parallel(n_jobs=-1)]: Done 298032 tasks | elapsed: 3.0min

[Parallel(n_jobs=-1)]: Done 353130 tasks | elapsed: 3.7min

[Parallel(n_jobs=-1)]: Done 406570 tasks | elapsed: 4.3min

[Parallel(n_jobs=-1)]: Done 469368 tasks | elapsed: 4.9min

[Parallel(n_jobs=-1)]: Done 480000 out of 480000 | elapsed: 5.1min finished

GridSearchCV(cv=10, estimator=LogisticRegression(), n_jobs=-1,

param_grid={'C': array([1.0000000e-04, 1.1111112e+03, 2.2222223e+03, 3.3333334e+03,

4.4444445e+03, 5.5555556e+03, 6.6666667e+03, 7.7777778e+03,

8.8888889e+03, 1.0000000e+04]),

'class_weight': ['balanced', None],

'dual': [True, False], 'fit_intercept': [True, False],

'multi_class': ['auto', 'ovr', 'multinomial'],

'penalty': ['l1', 'l2', 'elasticnet', 'none'],

'solver': ['newton-cg', 'lbfgs', 'liblinear', 'sag',

'saga'],

'tol': array([0.0001, 0.0012, 0.0023, 0.0034, 0.0045, 0.0056, 0.0067, 0.0078,

0.0089, 0.01 ])},

verbose=2)

print(classification_report(gs_lr.best_estimator_.predict(X_val_test), y_val_test))

precision recall f1-score support

1.0 0.95 0.93 0.94 247

2.0 0.57 0.74 0.64 34

3.0 0.86 0.71 0.77 17

accuracy 0.89 298

macro avg 0.79 0.79 0.79 298

weighted avg 0.90 0.89 0.90 298

print(gs_lr.best_params_)

{'C': 5555.555600000001, 'class_weight': None, 'dual': False, 'fit_intercept': True, 'multi_class': 'multinomial', 'penalty': 'none', 'solver': 'sag', 'tol': 0.0089}

Gradient Boosting Classifier

pipe_gbc = GradientBoostingClassifier(random_state=123)

learning_rate = np.linspace(0.001, 1, num=10)

n_estimators = [50, 100, 200, 500]

criteria = ['friedman_mse', 'mse', 'mae']

min_samples_split = np.linspace(0, 20, num=10, dtype=int)

min_samples_leaf = np.linspace(1e-2, 0.5, num=10)

np.append(min_samples_leaf, int(1))

min_weight_fraction_leaf = [0, 1]

max_depth = np.arange(1, 21, 2)

max_features = ['auto', 'sqrt', 'log2']

param_grid_gbc = {'learning_rate': learning_rate,

'n_estimators': n_estimators,

'criterion': criteria,

'min_samples_split': min_samples_split,

'min_samples_leaf': min_samples_leaf,

'min_weight_fraction_leaf': min_weight_fraction_leaf,

'max_depth': max_depth,

'max_features': max_features}

gs_gbc = RandomizedSearchCV(estimator=pipe_gbc, param_distributions=param_grid_gbc, n_iter=350,

cv=10, verbose=2, n_jobs=-1)

gs_gbc.fit(X_val_train, y_val_train)

Fitting 10 folds for each of 350 candidates, totalling 3500 fits

[Parallel(n_jobs=-1)]: Using backend LokyBackend with 12 concurrent workers.

[Parallel(n_jobs=-1)]: Done 17 tasks | elapsed: 2.7s

[Parallel(n_jobs=-1)]: Done 138 tasks | elapsed: 17.9s

[Parallel(n_jobs=-1)]: Done 341 tasks | elapsed: 50.2s

[Parallel(n_jobs=-1)]: Done 788 tasks | elapsed: 1.2min

[Parallel(n_jobs=-1)]: Done 1472 tasks | elapsed: 2.1min

[Parallel(n_jobs=-1)]: Done 2141 tasks | elapsed: 3.5min

[Parallel(n_jobs=-1)]: Done 3012 tasks | elapsed: 4.9min

[Parallel(n_jobs=-1)]: Done 3500 out of 3500 | elapsed: 5.8min finished

RandomizedSearchCV(cv=10,

estimator=GradientBoostingClassifier(random_state=123),

n_iter=350, n_jobs=-1,

param_distributions={'criterion': ['friedman_mse', 'mse',

'mae'],

'learning_rate': array([0.001, 0.112, 0.223, 0.334, 0.445, 0.556, 0.667, 0.778, 0.889,

1. ]),

'max_depth': array([ 1, 3, 5, 7, 9, 11, 13, 15, 17, 19]),

'max_features': ['auto', 'sqrt',

'log2'],

'min_samples_leaf': array([0.01 , 0.06444444, 0.11888889, 0.17333333, 0.22777778,

0.28222222, 0.33666667, 0.39111111, 0.44555556, 0.5 ]),

'min_samples_split': array([ 0, 2, 4, 6, 8, 11, 13, 15, 17, 20]),

'min_weight_fraction_leaf': [0, 1],

'n_estimators': [50, 100, 200, 500]},

verbose=2)

print(classification_report(y_val_test, gs_gbc.predict(X_val_test)))

precision recall f1-score support

1.0 0.96 0.95 0.96 240

2.0 0.77 0.75 0.76 44

3.0 0.76 0.93 0.84 14

accuracy 0.92 298

macro avg 0.83 0.88 0.85 298

weighted avg 0.92 0.92 0.92 298

print(gs_gbc.best_params_)

{'n_estimators': 50, 'min_weight_fraction_leaf': 0, 'min_samples_split': 11, 'min_samples_leaf': 0.01, 'max_features': 'auto', 'max_depth': 3, 'learning_rate': 0.223, 'criterion': 'friedman_mse'}

LightGBM Classifier

pipe_light = LGBMClassifier(random_state=123)

n_estimators = [int(x) for x in np.linspace(start=200, stop=2000, num=10)]

max_features = ['auto', 'sqrt']

max_depth = [int(x) for x in np.linspace(10, 110, num=11)]

bootstrap = [True, False]

param_grid_light = {'n_estimators': n_estimators,

'max_features': max_features,

'max_depth': max_depth,

'bootstrap': bootstrap

}

gs_light = GridSearchCV(estimator=pipe_light, param_grid=param_grid_light, cv=5, verbose=2, n_jobs=-1)

gs_light.fit(X_val_train, y_val_train);

Fitting 5 folds for each of 440 candidates, totalling 2200 fits

[Parallel(n_jobs=-1)]: Using backend LokyBackend with 12 concurrent workers.

[Parallel(n_jobs=-1)]: Done 17 tasks | elapsed: 1.3s

[Parallel(n_jobs=-1)]: Done 138 tasks | elapsed: 11.8s

[Parallel(n_jobs=-1)]: Done 341 tasks | elapsed: 28.8s

[Parallel(n_jobs=-1)]: Done 624 tasks | elapsed: 52.2s

[Parallel(n_jobs=-1)]: Done 989 tasks | elapsed: 1.4min

[Parallel(n_jobs=-1)]: Done 1434 tasks | elapsed: 2.0min

[Parallel(n_jobs=-1)]: Done 1961 tasks | elapsed: 2.7min

[Parallel(n_jobs=-1)]: Done 2200 out of 2200 | elapsed: 3.1min finished

[LightGBM] [Warning] Unknown parameter: bootstrap

[LightGBM] [Warning] Unknown parameter: max_features

[LightGBM] [Warning] Accuracy may be bad since you didn't explicitly set num_leaves OR 2^max_depth > num_leaves. (num_leaves=31).

print(classification_report(y_val_test, gs_light.predict(X_val_test)))

precision recall f1-score support

1.0 0.95 0.96 0.96 240

2.0 0.79 0.70 0.75 44

3.0 0.72 0.93 0.81 14

accuracy 0.92 298

macro avg 0.82 0.86 0.84 298

weighted avg 0.92 0.92 0.92 298

print(gs_light.best_params_)

{'bootstrap': True, 'max_depth': 30, 'max_features': 'auto', 'n_estimators': 200}

Random Forest

n_estimators = [int(x) for x in np.linspace(start=200, stop=2000, num=10)]

max_features = ['auto', 'sqrt']

max_depth = [int(x) for x in np.linspace(10, 110, num=11)]

min_samples_split = [2, 5, 10]

min_samples_leaf = [1, 2, 4]

bootstrap = [True, False]

random_grid = {'n_estimators': n_estimators,

'max_features': max_features,

'max_depth': max_depth,

'min_samples_split': min_samples_split,

'min_samples_leaf': min_samples_leaf,

'bootstrap': bootstrap}

rf = RandomForestClassifier(random_state=123)

gs_rf = RandomizedSearchCV(estimator=rf, param_distributions=random_grid,

n_iter=100, cv=10, verbose=2, random_state=123, n_jobs=-1)

gs_rf.fit(X_val_train, y_val_train);

Fitting 10 folds for each of 100 candidates, totalling 1000 fits

[Parallel(n_jobs=-1)]: Using backend LokyBackend with 12 concurrent workers.

[Parallel(n_jobs=-1)]: Done 17 tasks | elapsed: 7.4s

[Parallel(n_jobs=-1)]: Done 138 tasks | elapsed: 33.3s

[Parallel(n_jobs=-1)]: Done 341 tasks | elapsed: 1.2min

[Parallel(n_jobs=-1)]: Done 624 tasks | elapsed: 2.0min

[Parallel(n_jobs=-1)]: Done 1000 out of 1000 | elapsed: 3.2min finished

print(classification_report(y_val_test, gs_rf.predict(X_val_test)))

precision recall f1-score support

1.0 0.95 0.97 0.96 240

2.0 0.84 0.70 0.77 44

3.0 0.87 0.93 0.90 14

accuracy 0.93 298

macro avg 0.88 0.87 0.87 298

weighted avg 0.93 0.93 0.93 298

print(gs_rf.best_params_)

{'n_estimators': 800, 'min_samples_split': 5, 'min_samples_leaf': 2, 'max_features': 'auto', 'max_depth': 10, 'bootstrap': True}

Modeling Conclusion

We concluded that random forest was the model that had the best performance overall.

Unsupervised training

Now that we found our best model, we will try adding some clusters that could help classify easier our target variables.

from sklearn.cluster import KMeans

kmeans = KMeans(

n_clusters=3,

random_state=123

).fit(X_val_train)

test_cluster = kmeans.predict(X_val_test)

X_val_train = np.append(X_val_train, np.expand_dims(kmeans.labels_, axis=1), axis=1)

X_val_test = np.append(X_val_test, np.expand_dims(test_cluster, axis=1), axis=1)

best_rf = rf = RandomForestClassifier(random_state=123, n_estimators=800, min_samples_split=5,

min_samples_leaf=2, max_features='auto', max_depth=10, bootstrap=True)

best_rf.fit(X_val_train, y_val_train)

RandomForestClassifier(max_depth=10, min_samples_leaf=2, min_samples_split=5,

n_estimators=800, random_state=123)

print(classification_report(y_val_test,best_rf.predict(X_val_test)))

precision recall f1-score support

1.0 0.95 0.97 0.96 240

2.0 0.84 0.70 0.77 44

3.0 0.87 0.93 0.90 14

accuracy 0.93 298

macro avg 0.88 0.87 0.87 298

weighted avg 0.93 0.93 0.93 298

it seems that no metrics changed, so we will not include the clusters to avoid any noise in the algorithm

Evaluation

We will recreate the best model, but this time we will train it with all the data from the train set.

best_rf = rf = RandomForestClassifier(random_state=123, n_estimators=800, min_samples_split=5,

min_samples_leaf=2, max_features='auto', max_depth=10, bootstrap=True)

best_rf.fit(X_train, y_train)

RandomForestClassifier(max_depth=10, min_samples_leaf=2, min_samples_split=5,

n_estimators=800, random_state=123)

print(classification_report(y_test,best_rf.predict(X_test)))

precision recall f1-score support

1.0 0.95 0.95 0.95 497

2.0 0.76 0.74 0.75 84

3.0 0.83 0.86 0.84 57

accuracy 0.92 638

macro avg 0.85 0.85 0.85 638

weighted avg 0.92 0.92 0.92 638

Conclusion

We have developed a tool that predicts with 92% accuracy in which category we would place the health of a fetus. Since our goal was set to reach 90%, we can conclude that this project was successfully resolved.